Why Facebook’s business model won’t work in the long run

People spend nearly an hour every day scrolling through Facebook status updates, liking and commenting on posts. Many of them (66% in the U.S.) experience their news feed not just as a place to share and enjoy entertaining cat videos and pictures of a friend’s holiday trip, but as a primary source for consuming news and staying up to date on what’s happening in the world. This simple fact comes with a serious responsibility for Facebook, which uses algorithms to decide what does and doesn’t appear in your news feed.

These obscure algorithms influence what you see and don’t see, and can therefore lead to dangerously shortsighted worldviews.

Bubble of comfort

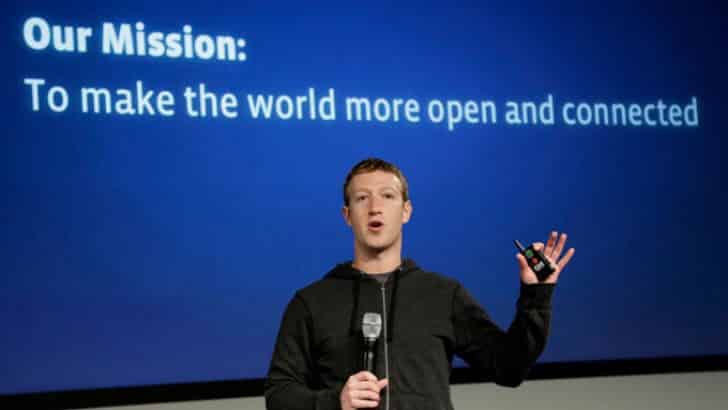

Facebook’s mission implies that they want to make the world more transparent. But one of the most important elements of being transparent is getting in touch with different perspectives, including perspectives that can create feelings of discomfort.

Let’s take a step back. Facebook uses algorithms to keep you engaged on the platform. Based on many secret influencing factors, the algorithm decides what content you will and will not see in your news feed.

Comfort is key. You will not stay on Facebook for long if your news feed fills up with uncomfortable stories that don’t match your current sense of reality. In addition, brands won’t be too happy when their ads are associated with discomfort. Therefore, these obscure algorithms create a comfortable bubble of self-confirming content to generate more engagement. Quite a contradiction to being transparent.

Transparency and profit don’t match

So what can Facebook do? Instead of struggling to tell genuine news from fake news, could they make their algorithm more transparent, by providing insight into what the secret influencing factors are and how they work? Unfortunately, this is wishful thinking, for at least three reasons:

- Understanding the algorithms, with their enormous number of influencing factors and the correlations between them, is probably close to impossible for the average person. Let alone the sympathetic idea of giving users the ability to control those (millions of?) influencing factors…

- Facebook wants to be perceived as an unbiased, neutral publishing platform. Providing insight into how the algorithms work would crush this perception.

- Competitors would gladly use insights into the algorithm for their own benefit.

My suggestion to Facebook would be to either remove their news feed algorithm or eliminate the word ‘open’ from their dictionary.

Summary

- Open equals transparency

- Transparency equals discomfort

- Discomfort equals disengagement

- Disengagement equals no ads

- No ads equals no profit

Facebook’s business model and mission don’t match and won’t survive in the long run.

What do you think?

Contribution by Dirk Wolbers